Article

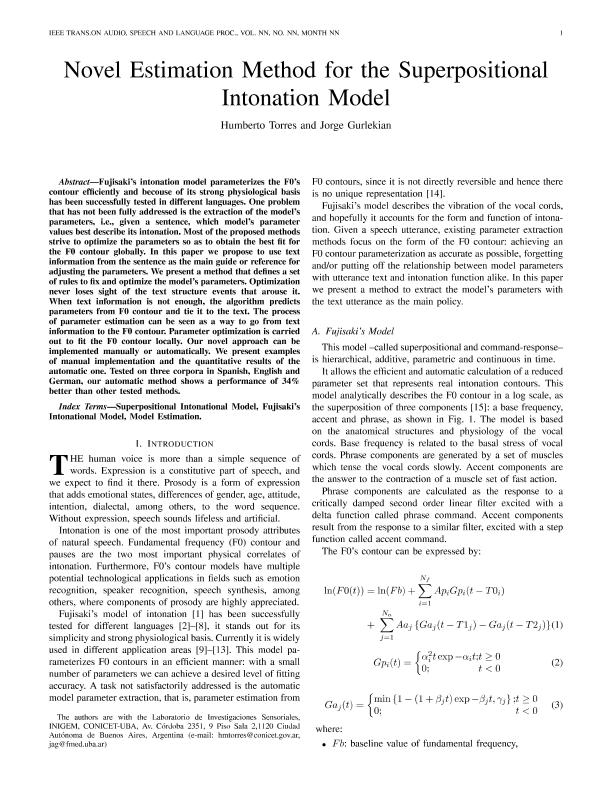

Novel estimation method for the superpositional intonation model

Publication date:

01/2016

Publisher:

IEEE Signal Processing Society

Journal:

IEEE/ACM Transactions on Audio, Speech, and Language Processing

ISSN:

2329-9290

e-ISSN:

2329-9304

Language:

English

Resource type:

Published article


Abstract

Fujisaki’s intonation model efficiently parameterizes the F0 contour and, because of its strong physiological basis, has been successfully tested in several languages. One problem that has not been fully addressed is the extraction of the model’s parameters, i.e., given a sentence, which parameter values best describe its intonation. Most of the proposed methods optimize the parameters to obtain the best global fit to the F0 contour. In this paper we propose to use text information from the sentence as the main guide, or reference, for adjusting the parameters. We present a method that defines a set of rules to fix and optimize the model’s parameters. Optimization never loses sight of the text-structure events that give rise to the parameters. When text information is not sufficient, the algorithm predicts parameters from the F0 contour and ties them to the text. The process of parameter estimation can thus be seen as a way to go from text information to the F0 contour. Parameter optimization is carried out to fit the F0 contour locally. Our approach can be implemented either manually or automatically. We present examples of the manual implementation and quantitative results for the automatic one. Tested on three corpora, in Spanish, English, and German, our automatic method performs 34% better than the other methods tested.
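The abstract takes the Fujisaki model itself as known. As a minimal sketch, assuming the standard textbook formulation ln F0(t) = ln Fb + Σ Ap·Gp(t − T0) + Σ Aa·[Ga(t − T1) − Ga(t − T2)], the Python below synthesizes an F0 contour from phrase commands (T0, Ap) and accent commands (T1, T2, Aa). It also illustrates why local fitting is cheap: once the accent timing T1, T2 is fixed (for example, from a syllable located in the text), log F0 is linear in Aa, so a windowed least-squares estimate has a closed form. This is not the authors' estimation algorithm; the function names, the constants α = 3, β = 20, γ = 0.9, and the windowed fit are illustrative assumptions.

```python
import numpy as np

# Typical Fujisaki constants from the literature; assumed here, not taken from the paper.
ALPHA = 3.0   # phrase control mechanism, natural angular frequency (1/s)
BETA = 20.0   # accent control mechanism, natural angular frequency (1/s)
GAMMA = 0.9   # ceiling of the accent component


def phrase_response(t, alpha=ALPHA):
    """Gp(t) = alpha^2 * t * exp(-alpha * t) for t >= 0, else 0."""
    t = np.asarray(t, dtype=float)
    tp = np.maximum(t, 0.0)  # clamp so exp() never overflows for negative t
    return np.where(t >= 0, alpha**2 * tp * np.exp(-alpha * tp), 0.0)


def accent_response(t, beta=BETA, gamma=GAMMA):
    """Ga(t) = min(1 - (1 + beta * t) * exp(-beta * t), gamma) for t >= 0, else 0."""
    t = np.asarray(t, dtype=float)
    tp = np.maximum(t, 0.0)
    g = np.minimum(1.0 - (1.0 + beta * tp) * np.exp(-beta * tp), gamma)
    return np.where(t >= 0, g, 0.0)


def fujisaki_f0(t, fb, phrases, accents):
    """Superpose base, phrase and accent components in the log-F0 domain.

    phrases: list of (T0, Ap); accents: list of (T1, T2, Aa).
    """
    t = np.asarray(t, dtype=float)
    log_f0 = np.full_like(t, np.log(fb))
    for t0, ap in phrases:
        log_f0 += ap * phrase_response(t - t0)
    for t1, t2, aa in accents:
        log_f0 += aa * (accent_response(t - t1) - accent_response(t - t2))
    return np.exp(log_f0)


def fit_accent_amplitude(t, f0, fb, phrases, t1, t2, window):
    """Hypothetical local fit: least-squares Aa for one accent with fixed T1, T2,
    using only samples with window[0] <= t <= window[1]."""
    t = np.asarray(t, dtype=float)
    f0 = np.asarray(f0, dtype=float)
    mask = (t >= window[0]) & (t <= window[1])
    # Residual log F0 after removing the base value and the phrase components.
    residual = np.log(f0[mask]) - np.log(fb)
    for t0, ap in phrases:
        residual -= ap * phrase_response(t[mask] - t0)
    g = accent_response(t[mask] - t1) - accent_response(t[mask] - t2)
    # Model is residual = Aa * g, so the least-squares Aa is <r, g> / <g, g>.
    return float(np.dot(residual, g) / np.dot(g, g))


if __name__ == "__main__":
    t = np.linspace(0.0, 2.0, 401)
    phrases = [(-0.1, 0.5)]
    accents = [(0.3, 0.6, 0.4)]
    f0 = fujisaki_f0(t, 80.0, phrases, accents)
    aa = fit_accent_amplitude(t, f0, 80.0, phrases, 0.3, 0.6, (0.25, 0.9))
    print(round(aa, 6))  # → 0.4
```

On a synthetic contour with a single accent, the local fit recovers the amplitude exactly. On real speech, neighbouring accents and F0 extraction errors would contaminate the window, which is where rules tying each command to the text become necessary.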


Collections

Articles (INIGEM)

Articles of INSTITUTO DE INMUNOLOGIA, GENETICA Y METABOLISMO


Citation

Torres, Humberto Maximiliano; Gurlekian, Jorge Alberto; Novel estimation method for the superpositional intonation model; IEEE Signal Processing Society; IEEE/ACM Transactions on Audio, Speech, and Language Processing; 24; 1; 1-2016; 151-160
