
dc.contributor.author

Arneodo, Ezequiel Matías

dc.contributor.author

Chen, Shukai

dc.contributor.author

Brown, Daril E.

dc.contributor.author

Gilja, Vikash

dc.contributor.author

Gentner, Timothy Q.

dc.date.available

2022-11-25T16:18:37Z

dc.date.issued

2021-08

dc.identifier.citation

Arneodo, Ezequiel Matías; Chen, Shukai; Brown, Daril E.; Gilja, Vikash; Gentner, Timothy Q.; Neurally driven synthesis of learned, complex vocalizations; Cell Press; Current Biology; 31; 15; 8-2021; 3419-3425

dc.identifier.issn

0960-9822

dc.identifier.uri

http://hdl.handle.net/11336/179036

dc.description.abstract

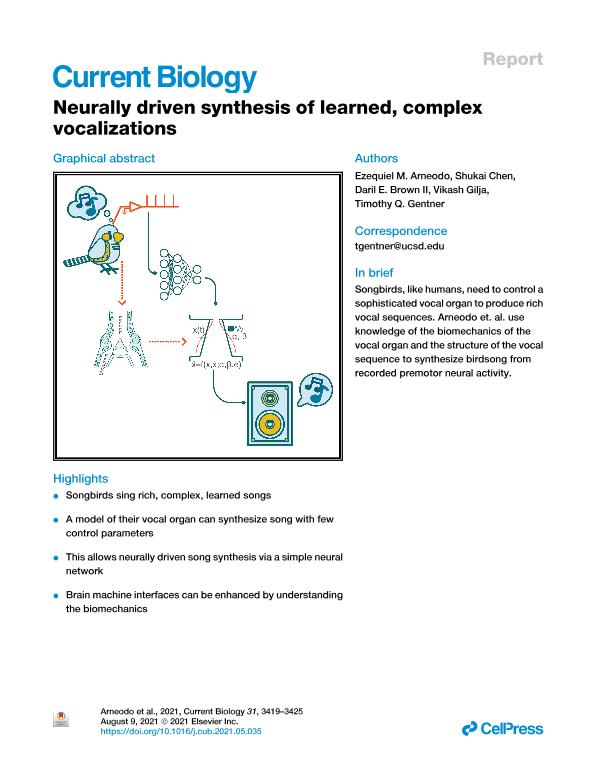

Brain machine interfaces (BMIs) hold promise to restore impaired motor function and serve as powerful tools to study learned motor skill. While limb-based motor prosthetic systems have leveraged nonhuman primates as an important animal model,1–4 speech prostheses lack a similar animal model and are more limited in terms of neural interface technology, brain coverage, and behavioral study design.5–7 Songbirds are an attractive model for learned complex vocal behavior. Birdsong shares a number of unique similarities with human speech,8–10 and its study has yielded general insight into multiple mechanisms and circuits behind learning, execution, and maintenance of vocal motor skill.11–18 In addition, the biomechanics of song production bear similarity to those of humans and some nonhuman primates.19–23 Here, we demonstrate a vocal synthesizer for birdsong, realized by mapping neural population activity recorded from electrode arrays implanted in the premotor nucleus HVC onto low-dimensional compressed representations of song, using simple computational methods that are implementable in real time. Using a generative biomechanical model of the vocal organ (syrinx) as the low-dimensional target for these mappings allows for the synthesis of vocalizations that match the bird's own song. These results provide proof of concept that high-dimensional, complex natural behaviors can be directly synthesized from ongoing neural activity. This may inspire similar approaches to prosthetics in other species by exploiting knowledge of the peripheral systems and the temporal structure of their output.
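The abstract does not spell out the decoder. The block below is a minimal, hypothetical sketch of what "mapping neural population activity … onto low-dimensional compressed representations of song, using simple computational methods that are implementable in real time" can look like. It fits a causal ridge-regression decoder from binned spike counts to a small number of target parameters, for example the parameters that drive a syrinx model. The function names (`lagged_features`, `fit_ridge`, `decode`), the lag window, the regularization strength, and the synthetic data are all assumptions made for illustration. This is not the authors' published pipeline.

```python
import numpy as np


def lagged_features(counts: np.ndarray, n_lags: int) -> np.ndarray:
    """Stack the current bin and the previous n_lags - 1 bins of spike counts.

    counts: (T, N) array of binned spike counts, one column per channel/unit.
    Returns a (T, N * n_lags) array. Bins before the recording start are
    zero-padded, so each row uses only present and past activity (causal).
    Assumes n_lags < T.
    """
    T, N = counts.shape
    feats = np.zeros((T, N * n_lags))
    for lag in range(n_lags):
        # Column block `lag` holds the counts shifted `lag` bins into the past.
        feats[lag:, lag * N:(lag + 1) * N] = counts[:T - lag]
    return feats


def fit_ridge(X: np.ndarray, Y: np.ndarray, lam: float = 1.0):
    """Closed-form ridge regression with an unpenalized intercept."""
    x_mean, y_mean = X.mean(axis=0), Y.mean(axis=0)
    Xc, Yc = X - x_mean, Y - y_mean
    # Solve (Xc^T Xc + lam * I) W = Xc^T Yc for the weight matrix W.
    W = np.linalg.solve(Xc.T @ Xc + lam * np.eye(X.shape[1]), Xc.T @ Yc)
    b = y_mean - x_mean @ W
    return W, b


def decode(X: np.ndarray, W: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Map feature rows to K low-dimensional target parameters."""
    return X @ W + b


# Synthetic example: 16 channels, 2 target parameters, 3-bin history.
rng = np.random.default_rng(0)
T, N, K = 2000, 16, 2
counts = rng.poisson(3.0, size=(T, N)).astype(float)
X = lagged_features(counts, n_lags=3)
true_W = rng.normal(size=(X.shape[1], K))
Y = X @ true_W + 0.5  # noise-free linear targets, for checking only

W, b = fit_ridge(X[:1500], Y[:1500], lam=1e-6)
Y_hat = decode(X[1500:], W, b)
err = np.max(np.abs(Y_hat - Y[1500:]))
```

The lag window uses only past bins, so each prediction is available as soon as the current bin closes. Ridge regression has a closed-form solution and decoding is a single matrix product, which keeps per-bin latency low. In a synthesis setting, the decoded parameters would then drive a generative model to produce audio.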

dc.format

application/pdf

dc.language.iso

eng

dc.publisher

Cell Press

dc.rights

info:eu-repo/semantics/openAccess

dc.rights.uri

https://creativecommons.org/licenses/by-nc-sa/2.5/ar/

dc.subject

BIOPROSTHETICS

dc.subject

BIRDSONG

dc.subject

BRAIN MACHINE INTERFACES

dc.subject

ELECTROPHYSIOLOGY

dc.subject

NEURAL NETWORKS

dc.subject

NONLINEAR DYNAMICS

dc.subject

SPEECH

dc.subject.classification

Other Physical Sciences

dc.subject.classification

Physical Sciences

dc.subject.classification

NATURAL AND EXACT SCIENCES

dc.title

Neurally driven synthesis of learned, complex vocalizations

dc.type

info:eu-repo/semantics/article

dc.type

info:ar-repo/semantics/artículo

dc.type

info:eu-repo/semantics/publishedVersion

dc.date.updated

2022-09-20T15:47:23Z

dc.journal.volume

31

dc.journal.number

15

dc.journal.pagination

3419-3425

dc.journal.pais

United States

dc.description.fil

Fil: Arneodo, Ezequiel Matías. University of California; United States. Consejo Nacional de Investigaciones Científicas y Técnicas. Centro Científico Tecnológico Conicet - La Plata. Instituto de Física La Plata. Universidad Nacional de La Plata. Facultad de Ciencias Exactas. Instituto de Física La Plata; Argentina

dc.description.fil

Fil: Chen, Shukai. University of California; United States

dc.description.fil

Fil: Brown, Daril E. University of California; United States

dc.description.fil

Fil: Gilja, Vikash. University of California; United States

dc.description.fil

Fil: Gentner, Timothy Q. The Kavli Institute For Brain And Mind; United States. University of California; United States

dc.journal.title

Current Biology

dc.relation.alternativeid

info:eu-repo/semantics/altIdentifier/url/https://doi.org/10.1016/j.cub.2021.05.035

dc.relation.alternativeid

info:eu-repo/semantics/altIdentifier/doi/http://dx.doi.org/10.1016/j.cub.2021.05.035
